The previous four sections of this blog series have dealt with the characteristics of the digital thread itself and the modeling process. Verification deals with characteristics of the real system that the digital thread models, primarily its performance against requirements. Assuming the results of physical testing, simulation, and analysis are captured within the digital thread, we can design algorithms to trace the connections between requirements and results and extract data from the appropriate results container. To the extent that requirements are not linked to passing test results, we have a very useful measure of the project risk remaining at that point in the development project.

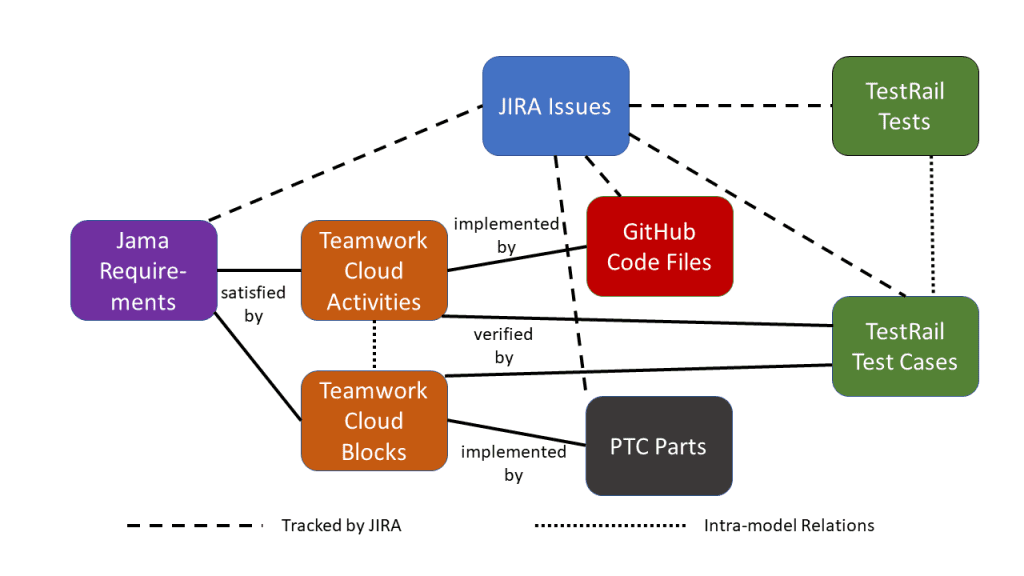

Verification may be complicated when the connection between requirements and test results is not direct. This depends on the digital thread schema. In the example we have been using, shown in Figure 1, requirements held in Jama are connected over the project lifecycle to tasks in JIRA, which are connected to test cases and test results in TestRail. These inter-model connections are maintained by Syndeia; there are also intra-model relations between test cases and test results maintained by TestRail, which may also become part of the digital thread.

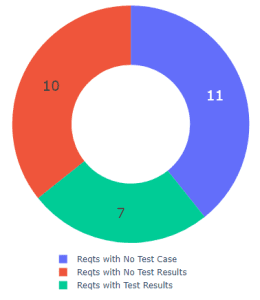

The first relevant query is how many requirements are connected to test cases and to tests. If we confine our query to inter-model connections only, this query is directed to the Syndeia Cloud graph database, which maintains these connections. Figure 2 is the combination of three graph queries:

- How many requirements in Jama are part of a particular digital thread, the Syndeia project UGV02?

- How many of these requirements are connected to test cases in TestRail?

- How many of these are connected to tests (test results) in TestRail?

The results provide a snapshot of the current status of the testing program:

- 11 requirements do not yet have a test case identified,

- 10 requirements have an associated test case but no test results yet, and

- 7 requirements have associated test results.
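The three counts above amount to a reachability question: starting from each requirement, does following the connections ever arrive at a test case, or at a test result? The sketch below illustrates that idea on a small in-memory edge list rather than on the Syndeia Cloud graph database itself. The identifiers, the ID prefixes used to tell artifact types apart, and the function names are all hypothetical; a real query would run in the graph database's own query language.

```python
from collections import defaultdict, deque

# Hypothetical connection records as (source_id, target_id) pairs.
# The ID prefixes (REQ-, TASK-, CASE-, TEST-) are invented for this sketch.
CONNECTIONS = [
    ("REQ-1", "TASK-1"), ("TASK-1", "CASE-1"), ("CASE-1", "TEST-1"),
    ("REQ-2", "TASK-2"), ("TASK-2", "CASE-2"),
    ("REQ-3", "TASK-3"),
]
REQUIREMENTS = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]


def reachable(start, edges):
    """Return every node reachable from start by following edges forward."""
    adjacency = defaultdict(list)
    for source, target in edges:
        adjacency[source].append(target)
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def coverage_counts(requirements, edges):
    """Bucket requirements by how far their trace reaches toward a test result."""
    counts = {"no_test_case": 0, "test_case_only": 0, "has_test_result": 0}
    for req in requirements:
        downstream = reachable(req, edges)
        if any(node.startswith("TEST-") for node in downstream):
            counts["has_test_result"] += 1
        elif any(node.startswith("CASE-") for node in downstream):
            counts["test_case_only"] += 1
        else:
            counts["no_test_case"] += 1
    return counts


print(coverage_counts(REQUIREMENTS, CONNECTIONS))
```

Because the traversal follows any number of hops, a requirement that reaches its test case only through an intermediate JIRA task is still counted as covered.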

The digital thread is constantly evolving. The inter-model connections stored by Syndeia are configuration-managed and version-sensitive. The graph analysis queries used in Figure 2 are formulated so that only the latest versions of the connections are returned. Syndeia maintains a record of connections to earlier versions of the requirements and test results, but these are not included in the report.
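As a rough illustration of what "latest versions only" means, the sketch below keeps one record per connected pair and discards older versions. The record layout and the `version` field are assumptions made for this example, not the Syndeia data model.

```python
# Hypothetical connection records; the field names are assumptions for this sketch.
connections = [
    {"source": "REQ-1", "target": "CASE-1", "version": 1},
    {"source": "REQ-1", "target": "CASE-1", "version": 2},
    {"source": "REQ-2", "target": "CASE-2", "version": 1},
]


def latest_connections(records):
    """Keep, for each (source, target) pair, only the highest-version record."""
    latest = {}
    for record in records:
        key = (record["source"], record["target"])
        if key not in latest or record["version"] > latest[key]["version"]:
            latest[key] = record
    return list(latest.values())


for record in latest_connections(connections):
    print(record)
```

The earlier versions remain in the input list, mirroring the way the history is retained but excluded from the report.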

A second subtlety of the metric is that only requirements already part of this particular digital thread, e.g. connected to JIRA or Teamwork Cloud, are considered, because these are the only requirements recorded in the Syndeia Cloud graph database (and then only with minimal metadata). To extend the critical metric analysis to a larger set of requirements in Jama, e.g. all requirements in a specific Jama project, we would need to query the Jama repository directly. Syndeia gives us this capability through the Syndeia Cloud REST API, which provides endpoints for querying each of the associated repositories, including Jama, JIRA, and TestRail.
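Once both lists are in hand, widening the metric is a matter of set arithmetic. The sketch below assumes the full list of requirement IDs has already been retrieved from the repository and the in-thread IDs from the graph database; it does not show the REST calls themselves, and the IDs and function name are hypothetical.

```python
def coverage_gap(all_requirement_ids, thread_requirement_ids):
    """Compare the full requirement set with the subset present in the digital thread."""
    all_ids = set(all_requirement_ids)
    in_thread = all_ids & set(thread_requirement_ids)
    outside = all_ids - in_thread
    return {
        "total": len(all_ids),
        "in_thread": len(in_thread),
        "outside_thread": sorted(outside),
    }


# Hypothetical IDs standing in for the two query results.
all_ids = ["REQ-1", "REQ-2", "REQ-3", "REQ-4", "REQ-5"]
thread_ids = ["REQ-1", "REQ-3"]
print(coverage_gap(all_ids, thread_ids))
```

Requirements listed under `outside_thread` have no connections at all, so they would enlarge the denominator of any verification metric computed over the whole project.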

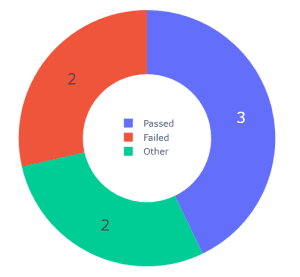

We can use this same set of capabilities to extract information about the test results. Having identified the seven relevant tests in the previous analysis, we can read the test status attribute from each of the tests.

Figure 3 shows the results of these queries:

- 3 tests are marked Passed,

- 2 tests are marked Failed, and

- 2 tests are marked Other, e.g. scheduled for retest.

Overall, only 3 of the 28 Jama requirements in the UGV02 digital thread project are currently verified by a passing test, indicating that the remaining project risk is quite high.
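The roll-up from test statuses to a risk figure can be sketched in a few lines. The example below uses the status tallies reported for Figure 3 and the 28 requirements from the coverage snapshot. It assumes each requirement maps to exactly one test and counts a requirement as verified only when that test is marked Passed; the variable names are illustrative.

```python
from collections import Counter

# Status of each of the seven tests, matching the tallies reported for Figure 3.
test_statuses = ["Passed"] * 3 + ["Failed"] * 2 + ["Other"] * 2
total_requirements = 28  # 11 + 10 + 7 from the coverage snapshot

tally = Counter(test_statuses)
verified = tally["Passed"]  # assumption: one test per requirement
unverified_fraction = (total_requirements - verified) / total_requirements

print(dict(tally))
print(f"{verified} of {total_requirements} verified; "
      f"{unverified_fraction:.0%} of requirements still unverified")
```

Tracking the unverified fraction over successive reports gives a simple trend line for remaining verification risk.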

Instead of linking to a test management tool where pass/fail attributes are readily available, the Syndeia user could link the requirements directly to files containing data from simulation or analysis tools. For example, Syndeia has an integration to Esteco VOLTA which allows it to view data within that simulation modeling repository. It also has the capability of reading data from XML, JSON or CSV files. However, parsing that data to determine pass/fail against a specific requirement might require additional coding within the critical metrics script.
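To give a sense of the additional coding involved, the sketch below derives a pass/fail verdict from CSV data for a "minimum value" style of requirement. The column name, the threshold, and the sample data are invented for illustration, and reading the file through Syndeia is not shown.

```python
import csv
import io

# Hypothetical simulation output; the column name and values are invented.
CSV_TEXT = """run_id,max_speed_kph
1,42.5
2,39.8
3,41.1
"""


def check_minimum(csv_text, column, threshold):
    """Return 'Passed' only if every row's value in `column` is at least `threshold`."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return "Other"  # no data, so no verdict can be given
    values = [float(row[column]) for row in rows]
    return "Passed" if min(values) >= threshold else "Failed"


# Hypothetical requirement: maximum speed must reach at least 40 km/h in every run.
print(check_minimum(CSV_TEXT, "max_speed_kph", 40.0))
```

Each requirement type (minimum, maximum, range, tolerance) would need its own comparison rule, which is why this logic tends to live in the metrics script rather than in the data file.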

In that regard, it’s worth noting that Syndeia’s concept of digital threads does not cover connection and execution of multiple simulation models to generate verification results. There are a number of such simulation orchestration platforms, from Esteco, Ansys, Dassault Systemes and others, which are very important in the “digital twin” domain.

Parts 3 through 7 have sought to show how critical metrics might look in a digital engineering development environment. In Part 8, we look at how these reports can be generated and stored on an automated basis and viewed by interested parties over the organizational network, using standard DevOps tools.

For more blogs in the series:

- Critical Metrics for Digital Threads, Part 1

- Critical Metrics for Digital Threads, Part 2

- Critical Metrics for Digital Threads, Part 3

- Critical Metrics for Digital Threads, Part 4

- Critical Metrics for Digital Threads, Part 5

- Critical Metrics for Digital Threads, Part 6

- Critical Metrics for Digital Threads, Part 7 (This Part)

- Critical Metrics for Digital Threads, Part 8

- Critical Metrics for Digital Threads, Part 9

Intercax
